Can AI technologies increase your business’s vulnerability to anti-discrimination lawsuits?

Proposed legislation regulating AI technologies says an unequivocal yes.

In 2022, the EEOC reported 73,485 new discrimination charges filed against employers. If HR leaders aren’t careful, AI involvement in recruiting can raise that number.

Industry leaders like Sam Altman, Geoffrey Hinton, and Timnit Gebru have voiced concerns about how bias and discrimination can be trained into AI.

This article will define the possible legislative minefield HR teams must navigate to keep their AI instruments from becoming diversity, equity, and inclusion (DEI) or privacy law liabilities.

A solid grasp of what lawmakers have drafted to regulate AI technologies (e.g., the EU AI Act and the AI Bill of Rights) can help HR leaders determine which AI-driven products will comply with state and federal regulations.

Generative AI, DEI, and privacy regulations

Can generative AI comply with current DEI and privacy regulations?

AI adoption into the field of HR has always been difficult due to the sensitive data HR professionals work with regularly.

The challenge of employing AI in HR is that employee relations, workplace investigations, and company culture problems are highly contextual and must be interpreted on a case-by-case basis.

The advent of generative AI instruments like ChatGPT and Bard bridges this gap with their ability to interpret conversations and generate human-like responses, allowing them to learn directly from HR case examples.

Generative AI chatbots can automate HR support cases by learning from each employee-HR interaction to improve future responses.

Personal data like Social Security numbers, birthdays, demographics, and health records are also challenging because laws such as the Privacy Act and HIPAA protect them. AI instruments that analyze these data types face the same scrutiny as HR professionals to ensure they comply with federal and state laws.

HR compliance software helps businesses understand complex and ever-changing state and federal compliance legislation.

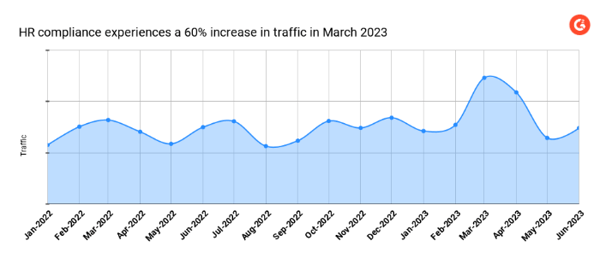

With the focus on generative AI, the HR Compliance Software category on G2 experienced a 60% increase in traffic in March 2023.

Existing legislation around AI

Even before the generative AI craze took off, industry experts were already warning consumers about AI being too biased for real-world application.

In the US, two jurisdictions have already passed legislation to rein in misuse and enforce quality control of AI in recruitment technologies, such as video interviewing and automated employment decision tools (AEDT, aka talent intelligence), which automate recruitment and hiring.

New York City passed legislation that mandates auditing AEDT software for biases across demographics like sex, race, and ethnicity.
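To make the idea of a bias audit concrete, here is a minimal sketch of one metric commonly used in such audits: each group's selection rate, and its impact ratio relative to the most-selected group. It illustrates the arithmetic only and is not the methodology any law prescribes. The function name `impact_ratios` and the `(group, selected)` record layout are assumptions made for this example.

```python
from collections import Counter


def impact_ratios(records):
    """Compute selection rates and impact ratios per group.

    `records` is an iterable of (group, was_selected) pairs, a
    hypothetical layout chosen for this sketch. Returns a dict mapping
    each group to (selection_rate, impact_ratio), where the impact ratio
    is the group's selection rate divided by the highest selection rate
    among all groups.
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1

    rates = {group: selected[group] / totals[group] for group in totals}
    if not rates:
        return {}

    best = max(rates.values())
    if best == 0:
        # Nobody was selected, so a ratio is undefined for every group.
        return {group: (0.0, None) for group in rates}
    return {group: (rate, rate / best) for group, rate in rates.items()}
```

An impact ratio well below 1.0 for a group means the tool selects that group less often than the most-selected group, which is the kind of disparity an auditor would want explained.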

Illinois has passed the Artificial Intelligence Video Interview Act, which requires companies to notify applicants that their video recordings will be analyzed by an AI, acquire consent before the recording, and describe what kinds of characteristics they will be measured on.

In the EMEA and APAC regions, regulations about AI in HR are indirectly impacted by broader regulatory actions around privacy, like the General Data Protection Regulation (GDPR) for the EU and the Digital Personal Data Protection Bill (DPDPB) for Indian businesses.

AI requires oversight

A growing number of US state lawmakers say no: AI can’t comply with DEI and privacy regulations without oversight.

The US is at the forefront of developing innovations in the AI space and is also at the forefront of implementing regulations to rein in the misuse of AI instruments. Congress hasn’t responded yet, but there have been rumblings at the federal level from the White House on what kinds of regulations it would like to see passed.

At the state level, the response has been more robust, if a little eccentric.

In 2023, 20 out of the 86 proposed state laws about AI regulation focused on AI involvement in HR business functions.

Legislation of interest:

- North Dakota enacted the Personhood Status amendment, establishing that artificial intelligence cannot have personhood as a legal status.

- Massachusetts introduced a Preventing a Dystopian Work Environment Act to protect employees from AI contributing to excessive surveillance, algorithmic hiring, and privacy concerns. Vermont also has a pending Electronic Monitoring bill with similar provisions.

- CA, DC, IL, and NJ all have pending legislation similar to NY’s law for auditing AEDT tools.

What's happening in the EU?

The EU Artificial Intelligence Act classifies AI-driven HR software as high-risk.

The EU is responding at the bloc-wide level and explicitly states which HR functions are to be protected from poor AI implementation.

Any AI decision-making that affects the professional longevity and prosperity of EU citizens is to be heavily scrutinized.

“AI systems used in employment, workers management and access to self-employment, notably for the recruitment and selection of persons, for making decisions on promotion and termination and for task allocation, monitoring or evaluation of persons in work-related contractual relationships, should also be classified as high-risk.”

This proposed Artificial Intelligence Act draft goes so far as to explicitly classify AI technology involved in hiring, promoting, or terminating employees as “high-risk AI systems.” The authors justify this attention by pointing out how these systems can,

“ . . . perpetuate historical patterns of discrimination, for example against women, certain age groups, persons with disabilities, or persons of certain racial or ethnic origins or sexual orientation. AI systems used to monitor the performance and behavior of these persons may also undermine the essence of their fundamental rights to data protection and privacy.”

The language in this document does not specify the types of AI technologies and what constitutes an AI system. It provides no details on the regulatory body that will audit these technologies and no information on possible repercussions for violations.

If anything, because this legislation applies across the entire EU, it will likely lead to the founding of numerous regulatory agencies and local government regulations.

Because the act classifies AI systems involved in HR business functions as high-risk, HR departments must take extra care when adopting these new technologies into their tech stack.

HR leaders must proceed with caution

Introducing sophisticated AI systems into HR workflows and tech stacks can substantially benefit productivity, efficiency, and innovation.

However, HR teams need to strike a delicate balance between complying with a rapidly evolving regulatory environment and maximizing the benefits AI offers to their employees.

HR teams can maximize the benefits of AI systems with a minimum amount of risk by relying on the guidance provided by HR compliance software.

Moving forward, the EU Artificial Intelligence Act and the White House AI Bill of Rights are useful guidelines for anticipating which HR business functions will experience additional scrutiny from lawmakers.

These documents have set expectations and standards for future regulatory actions by lawmakers worldwide.

Learn more about how your HR team can navigate the ethical considerations of using AI.

Edited by Shanti S Nair

by Jeffrey Lin