Hugging Face, a startup that helps businesses build, train, and deploy state-of-the-art machine learning models, announced a $100 million Series C funding round on May 9, 2022. Lux Capital led the round, adding Hugging Face to its portfolio of more than 40 investments in the artificial intelligence (AI) and machine learning (ML) space. The round values Hugging Face at $2 billion, placing it in the "double unicorn" club.

Hugging Face fits squarely into G2’s Natural Language Understanding (NLU) software category. These products help businesses with part-of-speech tagging, automatic summarization, named entity recognition, sentiment analysis, and emotion detection.

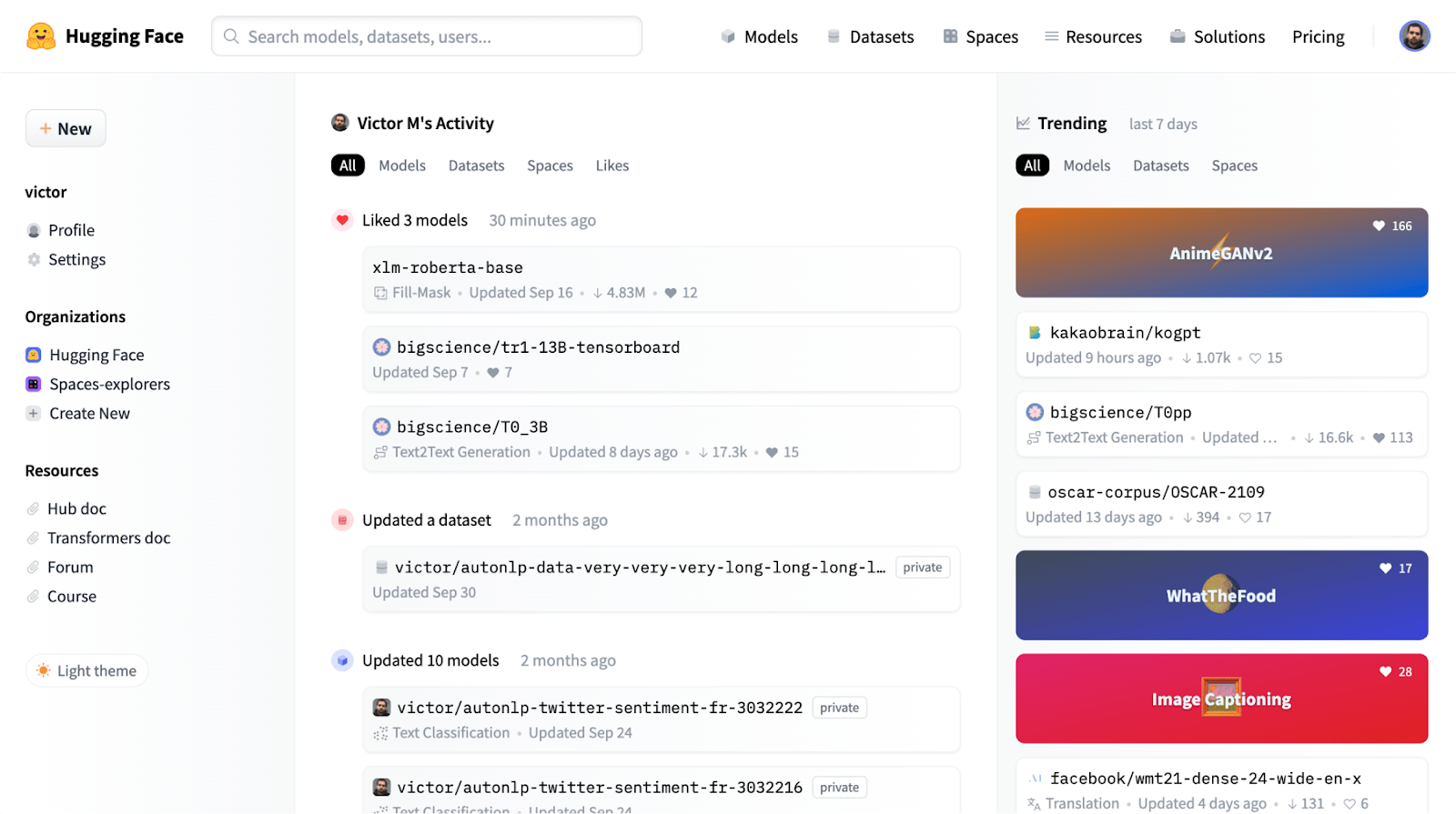

As the company has grown, Hugging Face has moved beyond NLU into the broader ML space, branding itself as “the AI community building the future.” On its platform, users can connect and collaborate on AI projects and find the datasets, models, and libraries they need to deploy ML models.

Why does Hugging Face matter?

More than 5,000 organizations use Hugging Face, including the Allen Institute for AI, Meta, Google, AWS, and Microsoft. Its platform serves as a home for machine learning, where data scientists can build and collaborate on ML models. Much of this value comes from Hugging Face’s catalog of models, which covers tasks ranging from image classification to summarization to translation.

Source: Hugging Face

A significant component of the company’s platform is its natural language processing library called Transformers. According to Hugging Face:

“Transformers provides APIs to easily download and train state-of-the-art pretrained models. Using pretrained models can reduce your compute costs, carbon footprint, and save you time from training a model from scratch.”

Hugging Face uses a freemium model: buyers can use its Inference API, which provides plug-and-play ML, at limited capacity with free community support. Paid tiers add features such as simplified model training and improved Inference API performance.

The Hugging Face platform is rooted in open source: its Transformers library has 62,000 stars and 14,000 forks on GitHub. This signals that the developer and data science community is actively using and improving Hugging Face’s technology. More broadly, software sellers are using open source to build their technology and grow communities around their products.

NLU technology is on the rise

On G2, we are seeing just how hot NLU technology is. Last month, traffic to the Natural Language Understanding (NLU) software category rose. This contrasts with the start of the year, when traffic declined, including a 25% dip in March. We believe the spike is due to major AI conferences held in April, such as the World Artificial Intelligence Cannes Festival, as well as large funding rounds for companies like Zhiyi Tech ($100 million) and Observe.ai ($125 million). We see this as a sign of business buyers’ appetite for this technology.

The increase in traffic should come as no surprise. As businesses gather more text data (emails, documents, and reviews), they are looking for ways to understand it and mine it for meaning. With models from providers such as Hugging Face, businesses can gain a competitive advantage by deploying AI quickly.

Edited by Shanti S Nair

by Matthew Miller
