This post is part of G2's 2024 technology trends series. Read more about G2’s perspective on digital transformation trends in an introduction from Chris Voce, VP, market research, and additional coverage on trends identified by G2’s analysts.

In an increasingly automated world, security software will focus on manual human actions

Prediction

Security solutions will protect organizations through human behavior, not AI capabilities, in 2024.

Despite the excitement around generative AI and its powerful capabilities, security software products will home in on human behavior as the crux of security concerns. Security products remain a holdout, possibly because of the unknown security and privacy risks associated with ChatGPT and GPT-4 technology.

While non-security products will continue to automate work by focusing on removing human actors, security product developers’ skepticism of this powerful, new technology will create a divergence between security and non-security products.

Across G2’s security product categories, there has been a noticeable absence of products integrating ChatGPT and GPT-4 technology. Instead, security software vendors on G2 have expressed a desire to refocus their product messaging on human behavior.

Ultimately, this messaging will make people within organizations responsible for identifying security risks in order to prevent data leaks, data breaches, and other incidents.

An increasing number of reviewers achieve ROI at a progressively faster rate

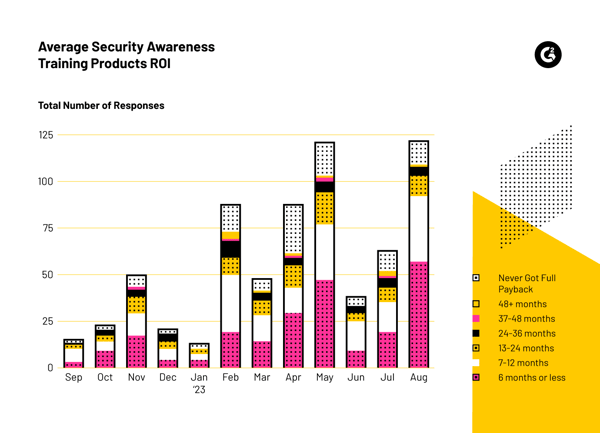

Between September 1, 2022, and August 31, 2023, 2,541 new reviews were added across all products in G2’s Security Awareness Training category. Of those reviews, 667 included data on their organization’s ROI after purchasing and using products that better equip staff to recognize and mitigate security threats against their organizations.
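To put those two counts in proportion, the arithmetic can be sketched in a few lines of Python. Only the two review counts come from the figures above; the helper name `share_percent` is made up for illustration.

```python
# Review counts quoted above for G2's Security Awareness Training category
# (September 1, 2022 through August 31, 2023).
TOTAL_REVIEWS = 2541
REVIEWS_WITH_ROI = 667


def share_percent(part: int, whole: int) -> float:
    """Return `part` as a percentage of `whole`, rounded to one decimal place."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return round(100 * part / whole, 1)


roi_share = share_percent(REVIEWS_WITH_ROI, TOTAL_REVIEWS)
print(f"{roi_share}% of new reviews included ROI data")  # prints 26.2%
```

In other words, roughly one in four new reviews in the category carried an ROI response.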

End users left more than half of all reviews, including those without ROI responses, after March 1, 2023. Most of the reviews that included ROI data were recorded after May 1, 2023, within the last four months of the 12-month period shown.

The figure covers ROI time frames ranging from less than six months to more than four years. Of the respondents, 13% said they never saw a full ROI on products meant to prepare their employees for cybersecurity threats against their workplaces, including phishing attacks and malware downloads.

From this data, one can infer that a growing number of workplace security teams have recently recognized the importance of human behavior in combating security risks.

As the total number of reviews in the category has increased, the percentage of respondents reporting that they never received an ROI has fluctuated. This may be because the products are preventative, which makes it difficult to tell whether an absence of security incidents is due to the training’s effectiveness or to external factors.

Regardless, one thing remains certain: the number of workplaces investing in these products continues to trend upward.

Despite automation, people remain the biggest security threat and greatest security defense

Employees’ laptops and smartphones are still some of the most prominent attack surfaces malicious actors can exploit. Phishing attacks, ransomware downloads, data exfiltration attempts, data tampering, and numerous additional risks will persist and perhaps escalate in scale with the power of AI in the wrong hands.

Without proper training, employees will remain one of the single biggest risks to any enterprise’s security.

As ChatGPT and GPT-4 technology continues to evolve rapidly, security products will remain averse to integrating its capabilities because of the unknown risks. As the data suggests, security products will instead run in the other direction and focus on human behavior as one of the greatest forces available to combat risks to proprietary and sensitive data.

Products created to thwart security threats will leverage human behavior as the ultimate defense that organizations must improve and rely on.

Learn more about the security challenges of using generative AI and how to prevent them.

Edited by Jigmee Bhutia

by Brandon Summers-Miller
